H2O.ai Releases H2O4GPU, the Fastest Collection of GPU Algorithms on the Market, to Expedite Machine Learning in Python | H2O.ai

machine learning - How to make custom code in Python utilize GPU while using PyTorch tensors and matrix functions - Stack Overflow

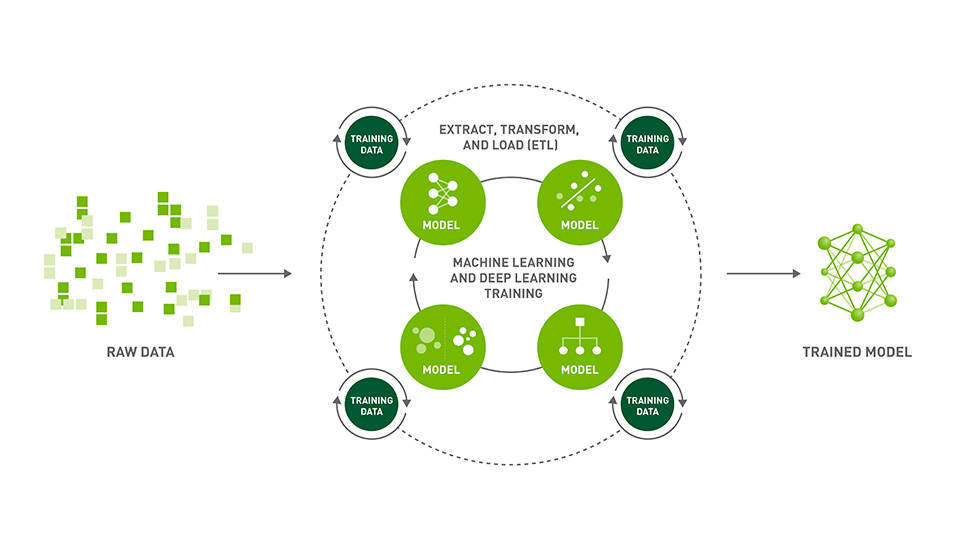

RAPIDS is an open source effort to support and grow the ecosystem of...

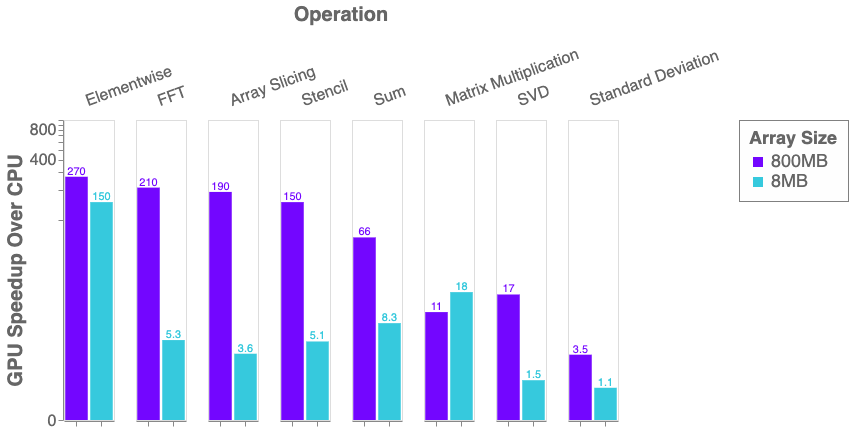

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

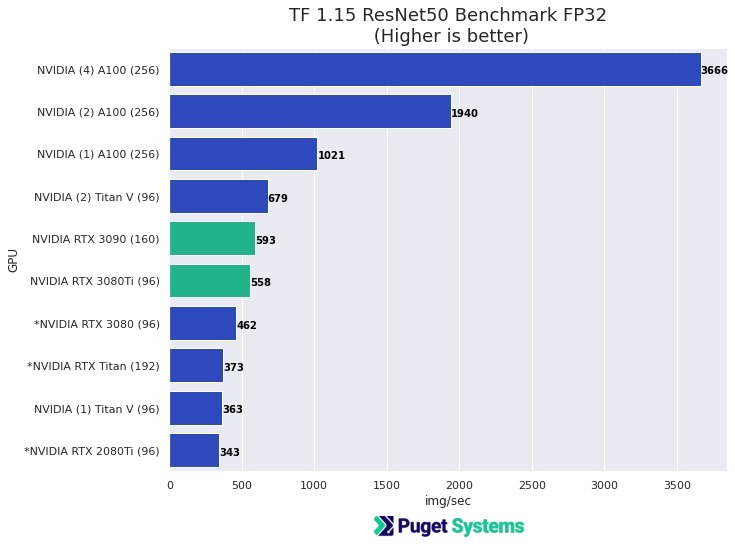

Monitor and Improve GPU Usage for Training Deep Learning Models | by Lukas Biewald | Towards Data Science

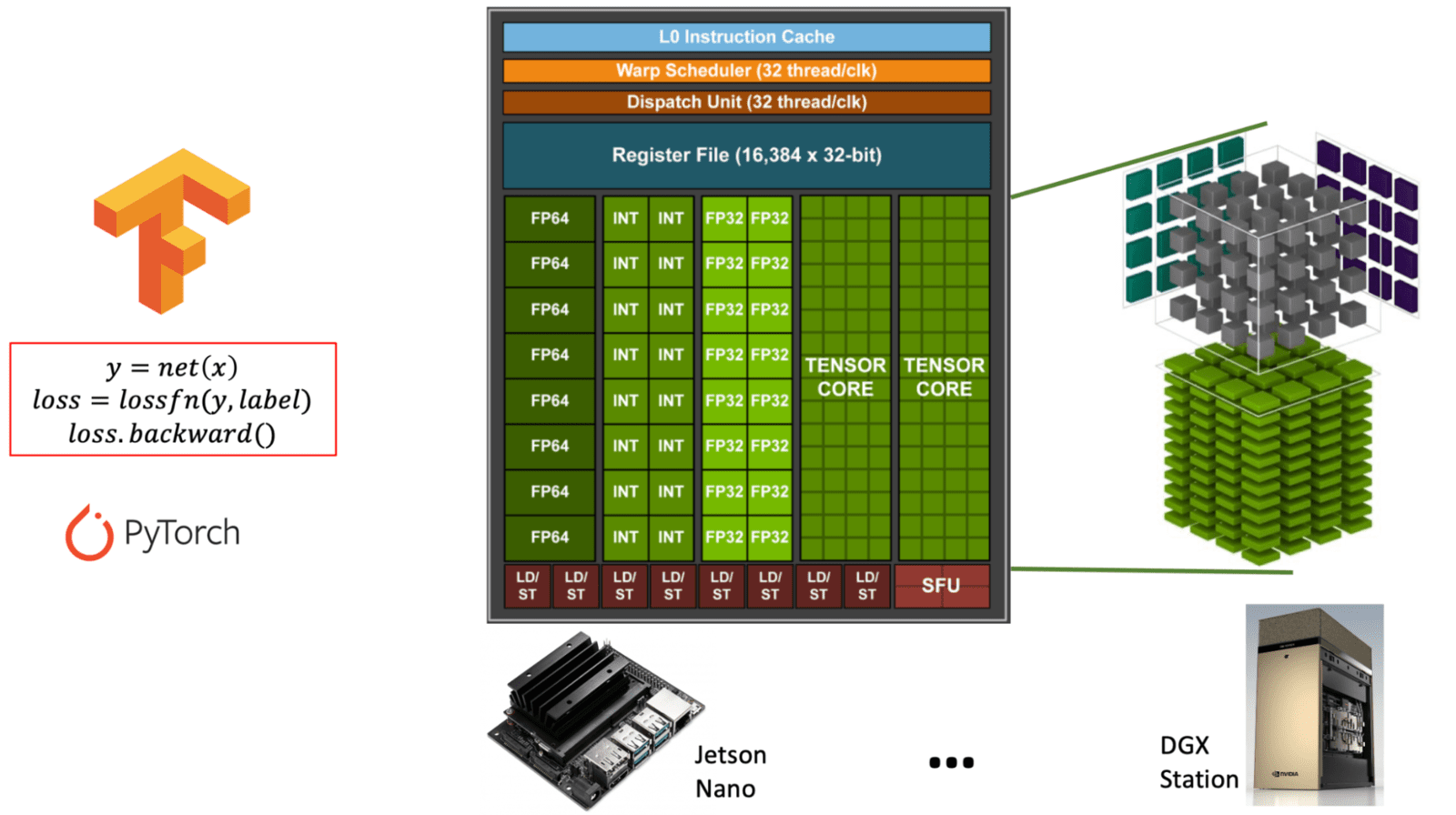

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers